Life Before Docker

To understand Docker, it helps to first know what problems people faced before it existed. So let's get started.

Before the era of running multiple applications on a single server, the common practice was to dedicate one server to a single application. As more and more people started using an application deployed on a single server, several challenges arose from the increased load and demand. Response times slowed, and if the server went down or needed maintenance, the application became inaccessible to all users. There was also no practical way to run multiple applications in isolation on the same server.

Virtualization, popularized by companies such as VMware, addressed this with virtual machines (VMs). Unlike dual booting, where only one operating system runs at a time, a hypervisor lets several VMs, each with its own operating system, run side by side on one physical server. The need to provision a dedicated server for each application dropped significantly. Running multiple applications on the same server meant better resource utilization and cost efficiency.

But VMs had problems of their own. The biggest is that each VM runs a separate guest operating system, which consumes memory, CPU cycles, and disk space. VMs also typically take longer to boot. So while VMs were a significant improvement over running only one application per server, they still had limitations.

Containers addressed this problem, and Docker, launched in 2013, brought them into the mainstream. A container image packages the application code, its dependencies, and the operating system libraries and tools the application needs into a single unit. When that image runs, the running instance is called a container. Because containers share the host's kernel instead of booting a full guest operating system, they start quickly and use fewer resources than VMs. If an image works on your local machine, you can run the same image in the cloud. The Ubuntu version or the kind of VM underneath matters far less, because the image carries the dependencies the application needs to run.

Virtual Machine vs Container

Neither approach is the best in every case. The choice between virtualization and containerization depends on your requirements. Virtualization suits diverse OS needs and complex setups, while containerization offers better performance, scalability, and agility for cloud-native and microservices architectures.

Let's get started with Docker

Docker is a platform that helps developers build, run, deploy, and scale containers. Because an application ships together with its dependencies, it behaves consistently wherever a compatible container runtime is available.

Some Important Parts of Docker

Docker Runtime: It consists of the container runtime engine, which allows us to start, stop, and manage containers.

There are two types of runtime:

runc: a lower-level container runtime. Its role is to work directly with the operating system to start and stop containers.

containerd: a higher-level container runtime that builds on runc and provides additional features to manage the complete lifecycle of containers.

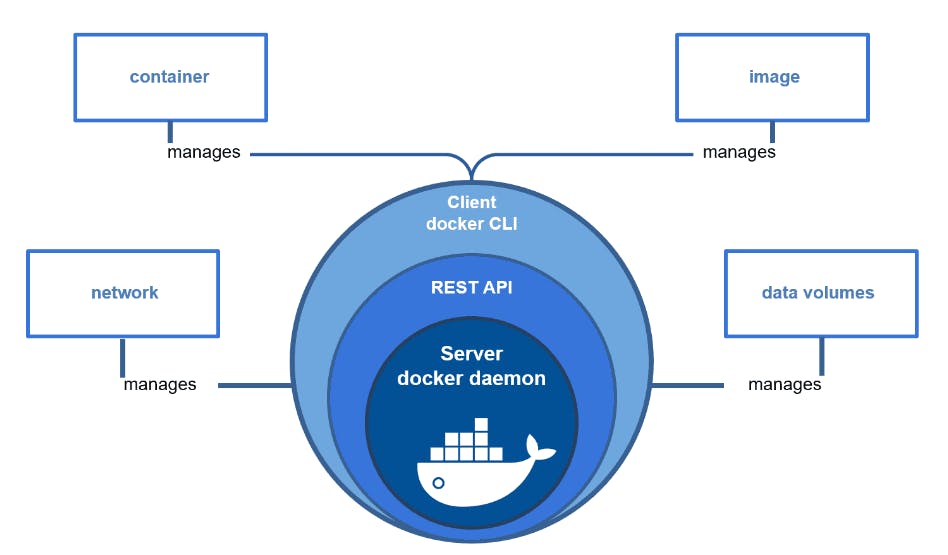

Docker Engine: It includes the Docker daemon, a long-running process that manages the building, running, and distributing of Docker containers. The Docker CLI (Command Line Interface) interacts with the Docker daemon to perform container operations.

Docker Orchestration: Docker Orchestration refers to the management and coordination of multiple Docker containers in a clustered environment. Consider the following scenarios:

Scaling up: With Docker orchestration tools like Docker Swarm or Kubernetes, you can easily scale your application from 10,000 to 20,000 containers. You can define scaling rules, and the orchestration platform will handle the creation and distribution of additional containers to meet the increased demand.

Restarting containers: If 5,000 containers have crashed or are experiencing issues, orchestration platforms can detect their status and automatically restart them. They monitor the health of containers and initiate restarts when containers fail or become unresponsive.

Updating containers: Docker orchestration tools provide features for seamless container updates. This allows you to easily deploy updates or new versions of your application across thousands of containers, ensuring smooth transitions and minimizing service interruptions.
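The three scenarios above share one idea: the orchestrator compares the state you asked for with the state it observes, then acts to close the gap. The following is a toy sketch of that loop, not how Docker Swarm or Kubernetes is implemented. The `Service`, `Container`, and `reconcile` names are hypothetical, and no real containers are started.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class Container:
    name: str
    image: str
    healthy: bool = True


@dataclass
class Service:
    """Hypothetical desired state: which image to run and how many copies."""
    image: str
    replicas: int
    containers: list = field(default_factory=list)
    _ids: count = field(default_factory=lambda: count(1), repr=False)

    def _start(self):
        # Stand-in for asking a container runtime to start a container.
        container = Container(name=f"web-{next(self._ids)}", image=self.image)
        self.containers.append(container)
        return container


def reconcile(service):
    """Run one pass of the control loop and return a log of actions taken."""
    actions = []
    # Restarting and updating: drop containers that are unhealthy
    # or that still run an older image than the desired one.
    for container in list(service.containers):
        if not container.healthy or container.image != service.image:
            service.containers.remove(container)
            reason = "unhealthy" if not container.healthy else "outdated"
            actions.append(f"removed {container.name} ({reason})")
    # Scaling up: start containers until the desired count is reached.
    while len(service.containers) < service.replicas:
        actions.append(f"started {service._start().name}")
    # Scaling down: stop surplus containers.
    while len(service.containers) > service.replicas:
        actions.append(f"stopped {service.containers.pop().name}")
    return actions


svc = Service(image="shop:1.0", replicas=2)
print(reconcile(svc))              # initial start
svc.containers[0].healthy = False  # simulate a crash
print(reconcile(svc))              # the failed container is replaced
svc.image = "shop:1.1"             # request a new version
print(reconcile(svc))              # old containers are swapped out
```

One simplification to keep in mind: this sketch replaces every outdated container in a single pass. Real orchestrators usually roll updates out gradually so that some containers keep serving traffic throughout.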

Container Image / Docker Image:

Imagine this scenario: You have a delicious dish in a bowl, and your friend Chethan lives in another country. Excitedly, you want to share the amazing taste with him. However, shipping the dish in a box won't work because it will spoil during the journey. So, what's the solution? Instead, you can share the recipe of the dish with Chethan. By doing so, he can recreate the dish on his own using the provided recipe. This way, he gets to experience the same wonderful taste without any spoilage. Similarly, Docker images serve as recipes for applications, allowing them to be easily shared and reproduced across different environments.

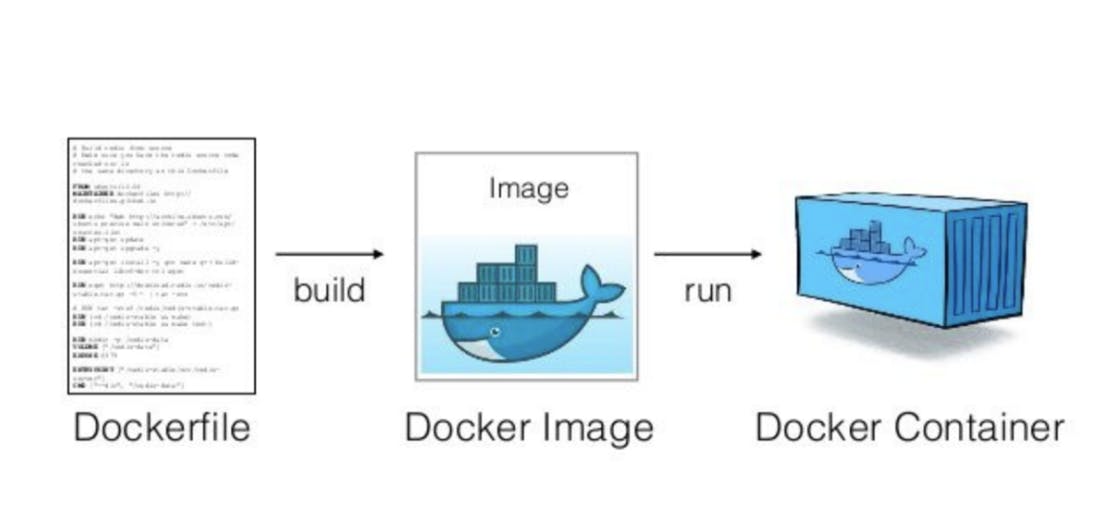

A Docker image is a lightweight, standalone, and executable software package that includes everything needed to run a piece of software, including the code, runtime environment, libraries, dependencies, and configuration files. It serves as a template for creating Docker containers.

Docker images are created from Dockerfiles. A Dockerfile is a plain text file that contains instructions on how to build a Docker image. These instructions specify the base image, dependencies, configuration settings, and commands needed to set up and run an application or service within a Docker container.
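To make this concrete, here is a sketch of what a Dockerfile for a small Python app might look like. It is held in a Python string so a few lines of code can list its instructions. The base image tag, the file names `requirements.txt` and `app.py`, and the start command are assumptions chosen for illustration. The `instructions` helper is hypothetical and ignores features such as line continuations.

```python
# A hypothetical Dockerfile for a small Python app (illustrative values only).
DOCKERFILE = """\
# Base image to build on
FROM python:3.12-slim
# Working directory inside the image
WORKDIR /app
# Install dependencies first so this layer can be cached between builds
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
# Copy the application code
COPY . .
# Default command when a container starts from the image
CMD ["python", "app.py"]
"""


def instructions(dockerfile_text):
    """Return (INSTRUCTION, arguments) pairs, skipping comments and blank lines."""
    pairs = []
    for line in dockerfile_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        keyword, _, args = line.partition(" ")
        pairs.append((keyword.upper(), args.strip()))
    return pairs


for keyword, args in instructions(DOCKERFILE):
    print(f"{keyword:<8}{args}")
```

Saved as a file named `Dockerfile` next to the application code, text like this could be built with `docker build -t my-app .` and started with `docker run my-app`, where `my-app` is a placeholder image name.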

The Open Container Initiative (OCI) is a project under the Linux Foundation. It was created to establish open standards for container formats and runtime environments, promoting interoperability and enabling different components of the container ecosystem to work seamlessly together.

Before the OCI was established, there were several container runtimes available, each with its own specifications and formats. This lack of standardization created compatibility issues and hindered the portability of containers across different platforms.

To address these challenges, the OCI introduced two main specifications:

Runtime Specification: The OCI Runtime Specification defines the configuration, execution environment, and lifecycle of a container. It specifies how a container runtime should interact with the underlying operating system, including container lifecycle management, file system handling, process isolation, and resource management.

Image Specification: The OCI Image Specification provides a standardized format for container images. It defines the structure and contents of a container image, including the filesystem layout, metadata, and configuration parameters.
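As a rough illustration of the two specifications, the sketch below builds a pared-down runtime configuration and an image manifest as Python dictionaries. The field names and media types follow the OCI specifications as I understand them, but the values are made up, the blobs are placeholders, and neither document is complete enough to be used by a real runtime or registry.

```python
import hashlib
import json

# Runtime side: an illustrative subset of the runtime spec's config.json.
# It tells a runtime what process to start and where the root filesystem is.
runtime_config = {
    "ociVersion": "1.0.2",
    "process": {
        "args": ["python", "app.py"],
        "cwd": "/app",
        "env": ["PATH=/usr/local/bin:/usr/bin:/bin"],
    },
    "root": {"path": "rootfs", "readonly": True},
    "hostname": "demo",
}


def descriptor(media_type, content):
    """Describe a blob by media type, sha256 digest, and size in bytes."""
    return {
        "mediaType": media_type,
        "digest": "sha256:" + hashlib.sha256(content).hexdigest(),
        "size": len(content),
    }


# Image side: placeholder blobs standing in for a real image config and layer.
config_blob = json.dumps({"architecture": "amd64", "os": "linux"}).encode()
layer_blob = b"placeholder for a gzip-compressed tar layer"

# The manifest ties the image together by pointing at its config and layers.
manifest = {
    "schemaVersion": 2,
    "mediaType": "application/vnd.oci.image.manifest.v1+json",
    "config": descriptor("application/vnd.oci.image.config.v1+json", config_blob),
    "layers": [
        descriptor("application/vnd.oci.image.layer.v1.tar+gzip", layer_blob)
    ],
}

print(json.dumps(runtime_config, indent=2))
print(json.dumps(manifest, indent=2))
```

The point to notice is that the manifest refers to every blob by the hash of its content. That lets any tool that follows the specification check that it received exactly the bytes the image author published.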

By establishing these specifications, the OCI aims to foster an open and vendor-neutral container ecosystem. It allows developers and organizations to build and deploy containers using different tools and runtimes, confident that their containers will work consistently across platforms that support OCI standards.

In conclusion, Docker's containerization technology has transformed the way applications are deployed, managed, and scaled. With its lightweight and efficient approach, along with the support of the Open Container Initiative, Docker has become a game-changer in the world of software development and deployment.